Part 1 of a multi-part series about analytical methods used in evaluations. All of the methods featured in this series appear in The Magenta Book Annex A.

We were recently inspired by The Magenta Book, the U.K. government’s comprehensive “guidance on what to consider when designing an evaluation”, to feature a series on analytical methods and how they can best be used in an evaluation. Our first featured method is contribution analysis, an analytical approach developed by John Mayne in the early 2000s to infer causality in program evaluations.

Contribution analysis, as defined in a white paper by INTRAC, is “a methodology used to identify the contribution a development intervention has made to a change or set of changes.” Contribution analysis is flexible: it does not require a baseline or a control group to have been established at the start of an intervention in order to be effective. The method is likely to be most useful when evaluating interventions that rest on a relatively clear theory of change, rather than more experimental programs.

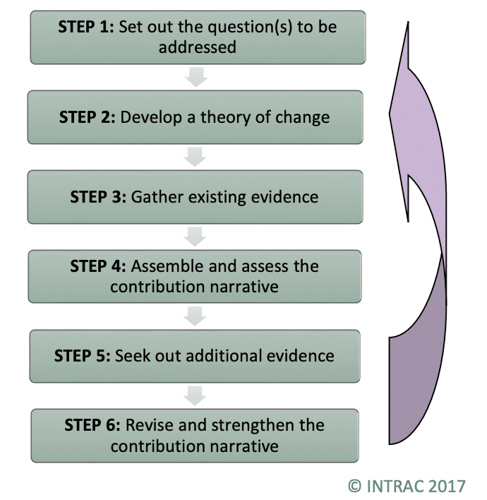

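Contribution analysis includes six established steps, as set out by Mayne:

1. Set out the cause-effect issue to be addressed.
2. Develop the postulated theory of change and the risks to it.
3. Gather the existing evidence on the theory of change.
4. Assemble and assess the contribution story and the challenges to it.
5. Seek out additional evidence.
6. Revise and strengthen the contribution story.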

According to Better Evaluation: “…Contribution analysis is not definitive proof, but rather provides evidence and a line of reasoning from which we can draw a plausible conclusion that, within some level of confidence, the program has made an important contribution to the documented results.”

Khulisa evaluated the USAID/South Africa Systems Strengthening for Better HIV/TB Outcomes project to determine the contribution of different district-support models to increasing HIV/TB service coverage and reducing antiretroviral therapy (ART) attrition. The evaluation found that the models that added staff to Department of Health (DOH) services (i.e., secondment of staff for direct service delivery) and those that mentored DOH staff (mentoring, roving clinical teams) were most strongly associated with improvements in clinical performance indicators.

Next week’s #EvalTuesdayTip will focus on another analytical method, Most Significant Change (MSC).